Crowdrender and Denoising in Blender 2.79

- James

- Jun 28, 2017

200 samples at 1080p frame size - glass bsdf shader on plate, diffuse bsdf on plane with checker texture

Initial Frustrations

Wouldn't it be nice if you could render using the denoiser in Blender 2.79 and still be able to use multiple machines (for both single frames and sequences) without having artefacts where the tiles meet? Man, I sounded like such a salesman there! Sorry :P

If you've never tried bucket rendering for single frames, you might not have experienced the blood-boiling frustration of having artefacts in your final render, be they squiggles, fireflies or discontinuities. Getting rid of them is not an exact science even when you're not splitting up a render into tiles. So when we had some artefacts whilst testing the denoiser with Crowdrender, for us it was certainly a show stopper, at first. I mean really, no denoiser support? Might as well pack up and go home.

Wait, what is a Crowdrender?

Ahhh, look at me asking questions like I am you reading this article! Sorry, a little too mainstream internet blog, thinking I am a clever clogs. Ahem, I'll get to the point.

If you haven't heard, Crowdrender is a network rendering add-on for Blender. It allows you to connect many computers together to render stills and sequences. In this article we're exploring how it works with Blender's new denoiser feature (coming in 2.79).

If you want the short story: it works. In version 0.1.2 of our add-on (as yet unreleased, just like 2.79) you can render frames using multiple computers and use the denoiser to get the same great results in less time.

If you love a little reading, then read on my fine fellow/fair lady.

Delving a little deeper

So, for those of you who love to read, here is a reference to an academic journal (it's a paid one, so unfortunately you only get to read the abstract for free) behind the new denoiser.

If you like seeing results rather than indulging in theory, you can see the Adaptive Rendering based on Weighted Local Regression in action in a video presentation at the link below.

http://sglab.kaist.ac.kr/WLR/

Anyway, the denoiser basically works by doing a lot of analysis of data in the image plane; that's pixel data, as far as my limited understanding goes. The theory goes that you can sample the data in the image to remove noise and keep detail. We know this works 'cause, well, look at the image. It convinced me (the video above is also amazing, by the way).

The controls in Blender give a hint at how it works. Think of it as a very advanced blur, where you get to control things like the radius and strength of the blur. The radius restricts what pixel data around any given location in the image is used to remove noise. The strength affects how strongly the data is weighted. Basic enough explanation, I guess.
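To make the "advanced blur" idea a bit more concrete, here is a toy sketch in plain Python. It is emphatically not the Cycles denoiser: `smart_blur_1d` is a made-up name, it works on a single row of grey values, and the way `strength` blends each pixel with its neighbourhood average is my own assumption for illustration. It only shows how a radius limits which pixels get looked at and how a strength setting controls how much they count.

```python
def smart_blur_1d(pixels, radius, strength):
    """Toy filter (hypothetical, not the Cycles algorithm).

    radius   -- how many neighbours on each side are considered
    strength -- 0.0 keeps the pixel as-is, 1.0 replaces it with the local mean
    """
    out = []
    for i, value in enumerate(pixels):
        lo = max(0, i - radius)                 # window is clipped at the image edge
        hi = min(len(pixels), i + radius + 1)
        window = pixels[lo:hi]
        local_mean = sum(window) / len(window)
        out.append((1.0 - strength) * value + strength * local_mean)
    return out


noisy = [0.5, 0.9, 0.1, 0.5, 0.5, 0.1, 0.9, 0.5]

untouched = smart_blur_1d(noisy, radius=1, strength=0.0)
smoothed = smart_blur_1d(noisy, radius=1, strength=1.0)

print("spread before:", max(noisy) - min(noisy))
print("spread after: ", max(smoothed) - min(smoothed))
```

Note how the window gets clipped at either end of the row: pixels near an edge simply have fewer neighbours to draw on.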

In the image below you can see a render where we intentionally disabled denoising on one machine so you can see the difference. This image was rendered with 200 samples, and the clarity of the denoised image is quite striking.

The catch, with multi-tile rendering

We've come across a similar issue with compositing tiles coming from separate computers or processes. Initially we'd tried compositing each tile on the computers we were using as opposed to sending all the tiles back to the user's main computer (sometimes called a client). This resulted in artefacts along the edges of each tile (like the image below). Wherever two tiles meet, the result computed for each tile is different as it is missing some data from the other tiles it shares an edge with. So we had to send the tiles un-composited back to the client where they were compiled into a single image and then sent through the compositor.

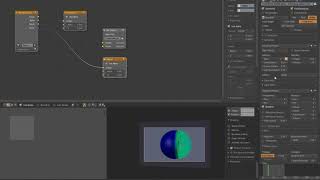

With the denoiser, we initially had the same issue as with compositing: there were artefacts. In the image below, though, they're highly accentuated. What you are actually looking at is not a final render but a result from Blender's compositor. We simply took the images from two computers and then put them into a color mix node set to "difference".

What this does is render pixels that are identical in overlapping areas of the two tiles as black. Those pixels which are not identical have a non-zero (hence brighter) colour value. You can clearly see where the two tiles have been overlapped. Now we see what the denoiser is up to along the tile boundaries. It's a little hard to see, but from this image you can see that the areas that overlap are fairly similar since they're dark, but there are brighter areas where the two images are different.
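If you'd like to see the "difference" idea without opening the compositor, here is a minimal sketch in plain Python. The function name and the tiny greyscale grids are made up for illustration; the only thing it assumes is that a difference blend at full factor gives the absolute difference of the two inputs per pixel.

```python
def difference(image_a, image_b):
    """Per-pixel absolute difference of two equally sized greyscale grids."""
    return [
        [abs(a - b) for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(image_a, image_b)
    ]


# Hypothetical overlap region as rendered by two different machines.
from_machine_1 = [
    [0.5, 0.5, 0.25],
    [0.5, 0.5, 0.25],
]
from_machine_2 = [
    [0.5, 0.5, 0.5],
    [0.5, 0.5, 0.75],
]

diff = difference(from_machine_1, from_machine_2)

for row in diff:
    # 0.0 shows up as black; anything brighter marks a disagreement.
    print(row)
```

Identical pixels come out as exactly zero, so any brightness at all in the result points at a place where the two machines disagreed.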

So, it appears that the sampling used in the denoiser results in a different value for pixels near a boundary or edge of the image frame. Pants. Not what we were hoping for, but logical now we realise that it's doing something similar to a blur node, albeit way more sophisticated.

The solution, how to remove the artefacts without having to hack

Looking a little more closely at the problem, the solution materialised (as it does when you care to really have a good look at something ;P). On closer inspection we could see that the differences in pixel values between the two tiles were less in the centre of the overlap than along the edges. This made sense. If the denoiser is using a radial pattern to sample, then where that radius overlaps an edge, it will be missing data it would otherwise have and result in deviations from what we'd see if there wasn't a join.
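Here is a small sketch of that reasoning, using a plain box filter on one row of pixels as a stand-in for the denoiser (an assumption on my part; the real thing is far smarter, and `box_filter`, `row` and `cut` are made-up names). It filters the whole row, then filters the left tile on its own, and lists which pixels end up different.

```python
def box_filter(pixels, radius):
    """Average each pixel with up to `radius` neighbours on each side."""
    out = []
    for i in range(len(pixels)):
        lo = max(0, i - radius)
        hi = min(len(pixels), i + radius + 1)
        window = pixels[lo:hi]
        out.append(sum(window) / len(window))
    return out


# Deterministic stand-in for a noisy row of 20 pixels.
row = [float((7 * i) % 10) for i in range(20)]
radius = 3
cut = 10  # the tile boundary: the left tile covers pixels 0..9

reference = box_filter(row, radius)        # filtered as one whole frame
left_tile = box_filter(row[:cut], radius)  # filtered without seeing the right tile

errors = [abs(reference[i] - left_tile[i]) for i in range(cut)]
affected = [i for i, e in enumerate(errors) if e > 0.0]

print("pixels that differ from the full-frame result:", affected)
```

In this toy setup the only pixels that can change are those within `radius` of the cut, because they are the only ones whose sampling window reaches across the boundary.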

We used the image tools in Blender, specifically the "sample line" tool in the UV/Image editor, to sample various locations of the overlap and confirmed that the data was consistently less different along the middle of the overlap. So, with a slight tweak to our code, we implemented overlapping the tiles that came from our render nodes. It turned out that if you set the overlap to be sufficiently larger than the radius in the denoiser, voilà! The artefacts are now impossible to see with the eye, as you can see below.
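The overlap trick can be sketched in a few lines of plain Python. This is not our add-on's code: it is a toy with a box filter standing in for the denoiser, one row of pixels standing in for the frame, and made-up names (`render_in_two_tiles`, `seam`, `margin`). Each tile is filtered with some extra pixels past the seam, then cropped back to the seam before stitching.

```python
def box_filter(pixels, radius):
    """Average each pixel with up to `radius` neighbours on each side."""
    out = []
    for i in range(len(pixels)):
        lo = max(0, i - radius)
        hi = min(len(pixels), i + radius + 1)
        window = pixels[lo:hi]
        out.append(sum(window) / len(window))
    return out


def render_in_two_tiles(row, radius, seam, margin):
    """Filter two tiles that each extend `margin` pixels past the seam,
    then crop both back to the seam and join them."""
    left_end = min(len(row), seam + margin)
    right_start = max(0, seam - margin)
    left = box_filter(row[:left_end], radius)
    right = box_filter(row[right_start:], radius)
    # Keep only the part of each tile on its own side of the seam.
    return left[:seam] + right[seam - right_start:]


row = [float((7 * i) % 10) for i in range(20)]
radius, seam = 3, 10

reference = box_filter(row, radius)  # what a single machine would produce
no_overlap = render_in_two_tiles(row, radius, seam, margin=0)
with_overlap = render_in_two_tiles(row, radius, seam, margin=radius)

print("matches without overlap:", no_overlap == reference)
print("matches with overlap:   ", with_overlap == reference)
```

In this toy, a margin equal to the filter radius on each side of the seam is enough for the stitched row to match the single-machine result exactly. The real denoiser is more involved, so treat the exact amount of overlap needed there as something to find by testing.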

Conclusions

The work to remove the artefacts was definitely worth the effort. Comparing how long it takes to get a similar result without the denoiser, anyone would agree that it's a massive step in the right direction. As a comparison I rendered the image above with and without the denoiser. The image above (denoiser on, yeah I know, kinda obvious XD) rendered in under two minutes using 200 samples (I was using a laptop and simulating two machines, so it would be much quicker on our test environment where we've got a handful of decent machines).

Right now I am using the same setup to render the same frame with no denoiser. Currently the render time is at fifty minutes and counting, samples are at 1700-ish, and this is the result. While it's close, there is still way more noise in the shadows, and up close it's still very grainy.

I love the Cycles engine, but the main issue I find with it is that when grains (or fireflies, insert your favourite term here) in the image are a problem, you face a law of rapidly diminishing returns as you increase samples.

The majority of convergence in the image occurs in the first few hundreds, perhaps even tens of samples, thereafter the image barely changes as the samples creep up. As I now flick back to blender to check on my render, we're at 2100 samples, and the image looks no different.
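A rough way to put numbers on those diminishing returns is the textbook Monte Carlo rule that noise falls off with the square root of the sample count. That rule is an idealisation I'm assuming here, not something measured from these renders (stubborn fireflies can behave worse), and `relative_noise` is just a made-up helper name.

```python
import math


def relative_noise(samples, baseline_samples):
    """Expected noise relative to the baseline, assuming noise ~ 1 / sqrt(samples)."""
    return math.sqrt(baseline_samples / samples)


baseline = 200
for samples in (200, 400, 800, 1700, 2100):
    print(samples, "samples ->", round(relative_noise(samples, baseline), 2), "x the noise at 200")

# How much does the last stretch buy you?
gain_1700_to_2100 = 1.0 - relative_noise(2100, 1700)
print("noise reduction going from 1700 to 2100 samples:", round(gain_1700_to_2100 * 100), "%")
```

Under that assumption you need four times the samples to halve the noise, and the 400 extra samples between 1700 and 2100 only shave off around a tenth of what is left, which fits with the image looking no different.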

I think this is where the power of the denoiser comes in, though it is not without its own issues it seems. Being able to smooth out the remaining blemishes using the denoiser without the need for thousands of samples is a much needed boost.

However, the method employed by the denoiser is effectively a smart blur, meaning you will lose detail. If that detail is critical, you will need more samples; there is just no other way to create that data.

Rendering using the cycles engine is creating data, the denoiser is post processing it. So there will come a moment when the denoiser just won't help. For the keen eyed among you that moment in the images above came when I realised that the samples on the inside of the glass on the right hand side just weren't enough for the denoiser to work with and the artefacts remained. Checking the render that is still going strong (at nearly 2300 samples now), those same artefacts have faded.

Crowdrender and the denoiser in 2.79

We're right now closing in on integrating the overlapping mechanism with 0.1.2, hence the extended delay in the release. We really appreciate your patience with us (Jeremy would be nodding in approval if he were sat next to me right now). We know that it's been a while since 0.1.1a and that you might be wondering what the holdup is (or you may have forgotten us entirely, arghh!).

We want to release 0.1.2 so that it's useful and doesn't make you want to scream. So once Jeremy and I have stopped screaming at it, we'll hand it over. We're working hard to iron out a few issues that have been brought up by our esteemed pre-release testers. Our thanks go out to them for helping us and for their patience with the bugs we're now fixing!

In the meantime, consider subscribing if you haven't already, 'cause it's the best way to get notified when the 0.1.2 release is gonna bust out of its cage... I mean, be released.

Take care and from us all God bless :D
