New short produced with crowdrender

- James Crowther

- Jul 5, 2017

Why do a short?

We wanted to prove that we could use crowdrender to produce an animation that looked great. It took us about a month, but we finally produced our first short using crowdrender. And it really is short, at only one minute long! Regardless of the length, we really loved making it, and we hope you enjoy watching it (the link to the video is at the bottom of this article ;) ).

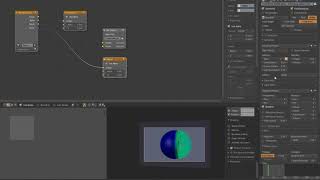

This short saw the crowdrender add-on pass some important tests on the way to being released. We understand how important it is to have frames flow from the render engine through the compositor. Post-processing is a key step in producing professional-looking results, so we made this short to prove to ourselves that crowdrender now works with the compositor for both single frames and sequences.

Compositing frames rendered on other machines - tricky

This was a challenge, to say the least. For a long time, it hasn't been easy to use frames rendered on several machines in the compositor. Each frame has to be sent to a network-attached storage drive (if you have one, that is; if not, try Dropbox :D ). Even if that all works, there's still a problem.

Blender's render engine API allows you to load an image file into Blender as a render result, just as if Blender had rendered that image internally. This allows us to render slices or tiles of a frame on other computers and then use the API to combine them into the whole image you see in Blender.
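To make the "slices or tiles" idea concrete, here is a minimal sketch in plain Python. It is not how crowdrender itself is implemented: the function names `split_rows` and `stitch`, the choice of horizontal slices, and the even split between machines are all assumptions made for illustration, and no Blender API is used.

```python
from typing import List, Optional, Sequence, Tuple

Pixel = Tuple[float, float, float, float]  # one RGBA pixel
Row = List[Pixel]


def split_rows(height: int, machines: int) -> List[Tuple[int, int]]:
    """Divide `height` scanlines into contiguous (start, stop) slices.

    Hypothetical scheme: one slice per machine, as even as possible.
    """
    if machines < 1 or height < machines:
        raise ValueError("need at least one scanline per machine")
    base, extra = divmod(height, machines)
    slices = []
    start = 0
    for index in range(machines):
        # The first `extra` machines take one additional scanline each.
        stop = start + base + (1 if index < extra else 0)
        slices.append((start, stop))
        start = stop
    return slices


def stitch(
    parts: Sequence[Tuple[Tuple[int, int], Sequence[Sequence[Pixel]]]],
    height: int,
    width: int,
) -> List[Row]:
    """Reassemble rendered slices into one full frame of RGBA rows."""
    frame: List[Optional[Row]] = [None] * height
    for (start, stop), rows in parts:
        if len(rows) != stop - start:
            raise ValueError("slice has the wrong number of scanlines")
        for offset, row in enumerate(rows):
            if len(row) != width:
                raise ValueError("scanline has the wrong width")
            frame[start + offset] = list(row)
    if any(row is None for row in frame):
        raise ValueError("some scanlines were never rendered")
    return [row for row in frame if row is not None]
```

Each machine would render only its own `(start, stop)` range, and the machine you are working on would call something like `stitch` once all the slices have arrived.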

The problem is that the API only accepts files with four channels, RGB and A. So if you want to use, say, the emission pass or the ambient occlusion pass in the compositor, you are out of luck. Those passes won't appear, since they cannot be stored in the image file we load as a render result. If we do use files that store this information, Blender throws an error and kaboom! You don't see the render, and you can't use the image in the compositor either.

How we managed to do it

The solution to all this was to not load the render using the render engine API. This has the unfortunate side effect that you can't see the render result appear in the UV/Image editor as you normally would, but you can then open the image (or images, if you are doing an animation) in the compositor and see the render there. And you can use all the layers and passes that you want, just like you normally do. Triumph!
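If you would rather script the "open the images in the compositor" step than click through it, something like the sketch below might do. Treat it as a rough illustration and not as what the add-on does internally: the helper names, the `shot_0001.exr` style file naming, and the frame numbering are assumptions. It is written against the Blender Python API of the 2.7x/2.8x era (`scene.node_tree`, `CompositorNodeImage`), and newer Blender releases may expose the compositor differently.

```python
import os


def first_frame_path(directory, prefix, frame, ext="exr"):
    """Build the path of one frame, e.g. <directory>/shot_0001.exr.

    The prefix + four-digit number + extension pattern is an assumption.
    """
    return os.path.join(directory, "{}{:04d}.{}".format(prefix, frame, ext))


def add_sequence_node(scene, first_path, frame_start, frame_count):
    """Add a compositor Image node that reads a rendered image sequence."""
    import bpy  # only importable inside Blender, so imported lazily here

    image = bpy.data.images.load(first_path)
    image.source = 'SEQUENCE'  # treat the numbered files as an animation

    scene.use_nodes = True  # make sure the scene has a compositor node tree
    node = scene.node_tree.nodes.new('CompositorNodeImage')
    node.image = image
    node.frame_start = frame_start      # scene frame where the sequence begins
    node.frame_duration = frame_count   # number of frames to read
    node.use_auto_refresh = True        # reload the file as the frame changes
    return node
```

Inside Blender you might call `add_sequence_node(bpy.context.scene, first_frame_path("//renders", "shot_", 1), 1, 250)`, where the folder, prefix and frame count are placeholders. If your files are not numbered from the same frame the sequence starts on, the node's `frame_offset` may also need adjusting.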

This was all we needed to post-process frames rendered on the four computers I have in my house. We were even able to go back and re-render some frames and have them seamlessly show up in the compositor as we scrubbed through the animation, which really helped with the post-processing. All of this happened across the four computers we were working with, which made the whole process a lot faster.
